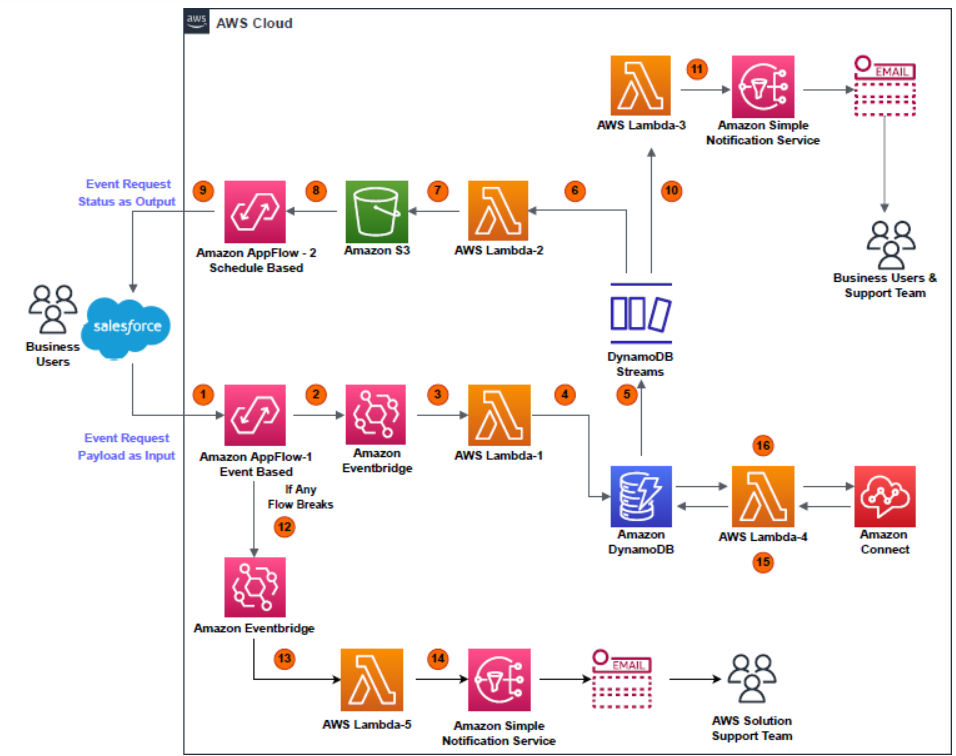

The architecture described in this blog post is intended to improve the efficiency of updating configuration data in a DynamoDB table. Authorized business users make updates directly from Salesforce instead of raising a support ticket and waiting for a manual change.

To integrate Salesforce with Amazon DynamoDB, the architecture makes use of two Amazon Web Services (AWS) products: Amazon AppFlow and Amazon EventBridge. These services can be used to integrate Salesforce Lightning with DynamoDB in a bi-directional manner, allowing updates to be made in either direction.

Amazon AppFlow is a fully managed integration service that transfers data between SaaS applications, such as Salesforce, and AWS services such as Amazon S3, Amazon Redshift, and Amazon EventBridge. It can be used to set up data flows between these systems that run on demand, on a schedule, or in response to events, and that map, filter, and validate fields in transit.

Amazon EventBridge is a fully managed event bus which makes it easy to connect applications together using data from your own applications, SaaS applications, and AWS services. It can be used to send data from one application to another, or to trigger an action in another application in response to an event.

By using these two services together, it is possible to build an event-driven, serverless microservice that allows authorized business users to update configuration data in DynamoDB directly from the Salesforce screen, without requiring access to the AWS Command Line Interface or AWS Management Console. This can help streamline the process of updating configuration data and improve the efficiency of the contact center application.

Steps to integrate Salesforce Lightning

Here is a high-level overview of the steps you might follow to integrate Salesforce Lightning with DynamoDB using Amazon AppFlow and Amazon EventBridge:

Step 1 : Set up AWS and DynamoDB

Set up your AWS account and create a DynamoDB table to store your configuration data.
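As a minimal sketch of this step, the table can be created from the AWS CLI. The helper name create_config_table and the partition key ConfigKey are assumptions for illustration; MyTable is the table name used in the scan example later in this post.

```shell
# Sketch: create an on-demand DynamoDB table for configuration items.
# "ConfigKey" is a hypothetical partition key; adjust it to your own schema.
create_config_table() {
  local table_name="${1:-MyTable}"
  aws dynamodb create-table \
    --table-name "$table_name" \
    --attribute-definitions AttributeName=ConfigKey,AttributeType=S \
    --key-schema AttributeName=ConfigKey,KeyType=HASH \
    --billing-mode PAY_PER_REQUEST || return 1
  # Block until the table is ACTIVE so the first write does not fail.
  aws dynamodb wait table-exists --table-name "$table_name"
}
```

Calling create_config_table "MyTable" creates the table and returns once it is ready. On-demand billing is chosen here because configuration tables are usually small and written rarely.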

Step 2 : Salesforce account and Salesforce Lightning application

Set up your Salesforce account and create a Salesforce Lightning application to manage your configuration data.

Step 3 : Set up Amazon AppFlow

Set up Amazon AppFlow by creating a flow with Salesforce as the source. AppFlow has no native DynamoDB destination, so the flow delivers Salesforce events to Amazon EventBridge, and the rule you create in Step 4 routes them to a target, such as an AWS Lambda function, that writes to the DynamoDB table. You will need to specify the source and destination for the data, as well as any mapping that needs to be applied to it.

With AWS Management Console :

- Sign in to the AWS Management Console and open the Amazon AppFlow console.

- In the AppFlow dashboard, click the “Create flow” button.

- On the “Specify flow details” page, enter a name and, optionally, a description for the flow.

- On the “Configure flow” page, choose Salesforce as the source, select or create a Salesforce connection, and select the Salesforce event (for example, a platform event or change data capture event) that carries the configuration change.

- On the same page, choose Amazon EventBridge as the destination and select or create a partner event source for the flow.

- For the flow trigger, choose to run the flow on event so that each change is forwarded as it happens. Flows to other destinations can instead run on demand or on a schedule.

- On the “Map data fields” page, map the source fields to the destination fields. Simple transformations such as masking or truncating values can be added here; more complex logic belongs in the target that writes to DynamoDB.

- Review the flow settings and click “Create flow” to complete the setup process, then activate the flow.

- Once the flow is active, Amazon AppFlow forwards each matching Salesforce event to EventBridge. You can monitor the status of the flow and view any errors in its run history in the AppFlow console.

With AWS CLI :

- Create a connector profile for Salesforce using the create-connector-profile command. AppFlow connects to Salesforce with OAuth tokens rather than a username and password, so the instance URL and credentials are passed as JSON. Here salesforce-profile.json is a hypothetical file that holds the connectorProfileProperties and connectorProfileCredentials structures. Use the Private connection mode only if the connection goes through AWS PrivateLink:

aws appflow create-connector-profile \
--connector-profile-name "SalesforceConnectorProfile" \
--connector-type "Salesforce" \
--connection-mode "Public" \
--connector-profile-config file://salesforce-profile.json

- No connector profile is needed for the destination. DynamoDB is not an AppFlow connector type; the flow's destination is Amazon EventBridge, which is identified inside the flow definition by its partner event source name.

- Create a new flow using the create-flow command. Flow names cannot contain spaces. The source, destination, and field mapping are passed as JSON, and source.json, destination.json, and tasks.json are hypothetical file names:

aws appflow create-flow \
--flow-name "MySalesforceToDynamoDBFlow" \
--description "This flow sends Salesforce changes to EventBridge for DynamoDB" \
--trigger-config triggerType=Event \
--source-flow-config file://source.json \
--destination-flow-config-list file://destination.json \
--tasks file://tasks.json

- Any transformations or mapping are part of the flow definition: each entry in the --tasks list names its sourceFields, a destinationField, and a taskType such as Map, Filter, or Mask. There is no separate command for them.

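- Start the flow using the start-flow command. The flow name is passed with --flow-name and cannot contain spaces. For an event-triggered or scheduled flow this activates the flow; for an on-demand flow it runs it once:

aws appflow start-flow \
--flow-name "MySalesforceToDynamoDBFlow"

This will activate the flow so that changes made in Salesforce are forwarded toward DynamoDB, applying any specified mapping along the way. You can monitor the progress of the flow using the describe-flow-execution-records command.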

Step 4 : Set up Amazon EventBridge

Set up Amazon EventBridge by associating the partner event source of the AppFlow flow's EventBridge destination with an event bus and creating a rule that matches the Salesforce events. The rule's target, such as an AWS Lambda function, is what writes the change to DynamoDB, so the rule needs at least one target.

With AWS Management Console :

To set up Amazon EventBridge using the AWS Management Console, you will need to perform the following steps:

- Sign in to the AWS Management Console and navigate to the Amazon EventBridge page.

- Under “Partner event sources”, select the event source that belongs to the AppFlow flow and click the “Associate with event bus” button. This creates a partner event bus with the same name as the event source.

- Click the “Create rule” button and select that event bus.

- Enter a name for the rule and select the “Event pattern” option.

- In the event pattern editor, enter a JSON object that defines the events that should trigger data transfer between Salesforce and DynamoDB. For example, to match every event from the flow's partner event source (replace the placeholder with the name of your event source):

{
  "source": [
    "<partner-event-source-name>"
  ]
}

- Click the “Add target” button and select a target for the rule, such as the AWS Lambda function that writes the change to DynamoDB. A rule without a target matches events but does nothing with them.

- Click the “Create rule” button.

This will set up Amazon EventBridge to invoke the target whenever a Salesforce event from the flow arrives on the partner event bus. Events are filtered by the rule's event pattern, and the target applies the change to DynamoDB. You can monitor the progress of the data transfer by inspecting the rule's metrics and the results of any targets that were triggered.

With AWS CLI :

- Find the name of the partner event source for the AppFlow flow using the list-event-sources command, and store it in a variable (replace the placeholder with the name that the command returns):

aws events list-event-sources
event_source_name="<partner-event-source-name>"

- Create a partner event bus for it using the create-event-bus command. A partner event bus must have the same name as its event source:

aws events create-event-bus \
--name "$event_source_name" \
--event-source-name "$event_source_name"

- Define the events that should trigger data transfer between Salesforce and DynamoDB by creating an event pattern that matches every event from that source:

event_pattern='{
  "source": [
    "'"$event_source_name"'"
  ]
}'

- Create a new rule on the partner event bus using the put-rule command:

aws events put-rule \
--name "MyRule" \
--event-bus-name "$event_source_name" \
--event-pattern "$event_pattern"

- Specify a target for the rule using the put-targets command. A rule without a target does nothing. To pass only selected fields to the target, use an InputTransformer; the static Input field cannot reference values from the event:

aws events put-targets \
--rule "MyRule" \
--event-bus-name "$event_source_name" \
--targets '[{
  "Id": "MyTarget",
  "Arn": "arn:aws:lambda:us-west-2:123456789012:function:MyLambdaFunction",
  "InputTransformer": {
    "InputPathsMap": {"field1": "$.detail.field1"},
    "InputTemplate": "{\"field1\": <field1>}"
  }
}]'

This will set up Amazon EventBridge to invoke the target whenever a Salesforce event from the flow arrives on the partner event bus. Events are filtered by the event pattern of the MyRule rule and reshaped by the target's input transformer before the target applies the change to DynamoDB. You can monitor the progress of the data transfer by inspecting the rule's metrics and the results of any targets that were triggered.
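EventBridge can only invoke a Lambda target if the function's resource-based policy allows it. The console adds this permission when you pick the function as a target, but with the CLI it is a separate step. The following is a minimal sketch, assuming the rule and function names used above; allow_rule_to_invoke_lambda is a hypothetical helper name.

```shell
# Sketch: let an EventBridge rule invoke a Lambda function.
# Arguments: rule name, event bus name, Lambda function name.
allow_rule_to_invoke_lambda() {
  local rule_name="$1" bus_name="$2" function_name="$3" rule_arn
  # Look up the rule ARN instead of assembling it by hand.
  rule_arn="$(aws events describe-rule \
    --name "$rule_name" \
    --event-bus-name "$bus_name" \
    --query 'Arn' --output text)" || return 1
  # Scope the permission to this one rule through --source-arn.
  aws lambda add-permission \
    --function-name "$function_name" \
    --statement-id "${rule_name}-invoke" \
    --action "lambda:InvokeFunction" \
    --principal "events.amazonaws.com" \
    --source-arn "$rule_arn"
}
```

For example: allow_rule_to_invoke_lambda "MyRule" "<event bus name>" "MyLambdaFunction". Restricting the permission with --source-arn keeps other rules in the account from invoking the function.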

Step 5: Test the integration

Test the integration by making updates to the configuration data in either Salesforce or DynamoDB and verifying that the changes are reflected in the other system.
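Before the end-to-end test, the rule's event pattern can be checked in isolation. The sketch below wraps the test-event-pattern command in a hypothetical helper, pattern_matches_event, which succeeds only when a sample event matches the pattern. The helper name and the sample file mentioned afterwards are assumptions for illustration.

```shell
# Sketch: succeed only if a sample event matches an event pattern.
# Arguments: event pattern JSON, sample event JSON.
pattern_matches_event() {
  local pattern="$1" event="$2" result
  result="$(aws events test-event-pattern \
    --event-pattern "$pattern" \
    --event "$event" \
    --query 'Result' --output text)" || return 1
  # With --output text the CLI prints the boolean result as True or False.
  [ "$result" = "True" ]
}
```

For example: pattern_matches_event "$event_pattern" "$(cat sample-event.json)" && echo "pattern matches", where sample-event.json is a hypothetical file containing a complete event (id, account, source, time, region, resources, detail-type, and detail).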

With AWS Management Console:

To test the integration between Salesforce and DynamoDB using the AWS Management Console, you will need to perform the following steps:

- Sign in to the AWS Management Console and navigate to the Amazon AppFlow page.

- Locate the flow that you set up to transfer data between Salesforce and DynamoDB and verify that it is active.

- In Salesforce, make updates to the configuration data that you want to transfer to DynamoDB.

- In the AWS Management Console, navigate to the Amazon DynamoDB page and verify that the updates to the configuration data have been reflected in the DynamoDB table.

- The flow set up above is one-way, from Salesforce to DynamoDB. If you have also built a reverse path, make updates to the configuration data in DynamoDB and verify that the changes are reflected in Salesforce.

This will confirm that the integration between Salesforce and DynamoDB is working as expected and that data is being transferred correctly between the two systems.

With AWS CLI:

To test the integration between Salesforce and DynamoDB using the AWS CLI, you will need to perform the following steps:

- In Salesforce, make updates to the configuration data that you want to transfer to DynamoDB.

- Use the describe-flow-execution-records command to check the status of the flow and verify that data is being transferred from Salesforce to DynamoDB. Use the flow name you chose in Step 3, passed with --flow-name (AppFlow flow names cannot contain spaces):

aws appflow describe-flow-execution-records \
--flow-name "MySalesforceToDynamoDBFlow"

- Use the scan command to retrieve the data from the DynamoDB table and verify that the updates made in Salesforce are reflected in the table:

aws dynamodb scan \
--table-name "MyTable"

The flow set up above is one-way, from Salesforce to DynamoDB. If you have also built a reverse path, you can make updates to the configuration data in DynamoDB and verify that the changes are reflected in Salesforce.

This will confirm that the integration between Salesforce and DynamoDB is working as expected and that data is being transferred correctly between the two systems.
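A scan reads the whole table, which is fine for a small configuration table but noisy when checking a single change. The following is a minimal sketch of a targeted check with get-item, assuming the table has a string partition key named ConfigKey. Both that key name and the get_config_item helper are hypothetical.

```shell
# Sketch: read one configuration item by key instead of scanning the table.
# Arguments: table name, value of the (hypothetical) ConfigKey partition key.
# The key value is interpolated into JSON, so it must not contain double quotes.
get_config_item() {
  local table_name="$1" config_key="$2"
  aws dynamodb get-item \
    --table-name "$table_name" \
    --key "{\"ConfigKey\": {\"S\": \"$config_key\"}}" \
    --consistent-read
}
```

For example: get_config_item "MyTable" "queue-timeout", where queue-timeout stands for whichever record you changed in Salesforce. The --consistent-read flag asks for a strongly consistent read, so a write that has just completed is visible.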

Step 6 : Deploy the integration

Deploy the integration to production and make it available to authorized business users.

With AWS Management Console :

To deploy the integration between Salesforce and DynamoDB to production using the AWS Management Console, you will need to perform the following steps:

- Sign in to the AWS Management Console and navigate to the Amazon AppFlow page.

- Locate the flow that you set up to transfer data between Salesforce and DynamoDB and verify that it is active.

- Navigate to the Amazon EventBridge page and verify that the rule from Step 4 is enabled and has its target attached.

- To enable authorized business users to use the integration, grant them access to the Salesforce Lightning application and the underlying configuration object. They do not need to be added as users in the AWS Management Console; IAM policies are needed only for the AWS resources themselves, such as the role that the rule's target uses to write to DynamoDB.

This will make the integration between Salesforce and DynamoDB available to authorized business users in production. You can monitor the progress of the data transfer and the status of the integration using the Amazon AppFlow and Amazon EventBridge pages in the AWS Management Console.

With AWS CLI :

To deploy the integration between Salesforce and DynamoDB to production using the AWS CLI, you will need to perform the following steps:

- Verify that the flow that you set up to transfer data between Salesforce and DynamoDB is active by using the describe-flow command and checking that flowStatus is Active. Use the flow name you chose in Step 3:

aws appflow describe-flow \
--flow-name "MySalesforceToDynamoDBFlow"

This will make the integration between Salesforce and DynamoDB available to authorized business users in production. You can monitor the progress of the data transfer using the describe-flow-execution-records command, and check the state of the EventBridge rule using the describe-rule command.
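For a quick production check, the flow's status and its most recent run can be read in one call. The following is a minimal sketch built on the describe-flow command. The helper name flow_is_healthy is hypothetical, and treating "Active, and the last run did not end in Error" as healthy is an assumption you may want to tighten.

```shell
# Sketch: print the flow status and last run status, and succeed only if
# the flow is Active and its most recent run did not end in Error.
flow_is_healthy() {
  local summary
  summary="$(aws appflow describe-flow \
    --flow-name "$1" \
    --query '[flowStatus, lastRunExecutionDetails.mostRecentExecutionStatus]' \
    --output text)" || return 1
  echo "$summary"
  case "$summary" in
    Active*Error*) return 1 ;;
    Active*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example: flow_is_healthy "MySalesforceToDynamoDBFlow" || echo "flow needs attention". The exit status makes the helper easy to use from a scheduled job or a deployment script.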

Conclusion

This is just a high-level overview of the process, and there may be additional steps or considerations depending on your specific needs and requirements. It is also worth noting that Amazon AppFlow and Amazon EventBridge are just two options for integrating Salesforce and DynamoDB; there are other approaches and tools that you could use as well.