Picture this scenario: you receive an email from your CEO, requesting some vital information.

As you read it, you’re struck by how closely it matches your CEO’s tone and language. It even includes a light-hearted joke about her beloved pet dog.

The email is flawless, accurate, and utterly convincing.

But here’s the twist: it was actually composed by generative AI, fed with basic information that a cyber-criminal gleaned from your CEO’s social media profiles.

The arrival of OpenAI’s ChatGPT in the mainstream has thrust AI into the limelight and raised legitimate concerns about its impact on cyber defense.

Shortly after its launch, researchers demonstrated that ChatGPT could craft phishing emails, write malware code, and explain how to embed malware in documents.

Adding to the intrigue, ChatGPT is neither the first nor the last chatbot to emerge. Technology giants like Google and Baidu have also thrown their hats into the ring. As these industry powerhouses compete for generative AI supremacy, what does this mean for the future of cyber defense?

The AI and Generative AI Market

- Asia-Pacific is expected to see strong growth in the generative AI market from 2022 to 2030, with a high compound annual growth rate (CAGR). The region is poised to adopt generative AI technologies widely, driving innovation and new solutions

- A key trend driving demand for generative AI is the growing use of AI-integrated solutions across multiple industries, which reflects a broad recognition of the value generative AI brings to different verticals

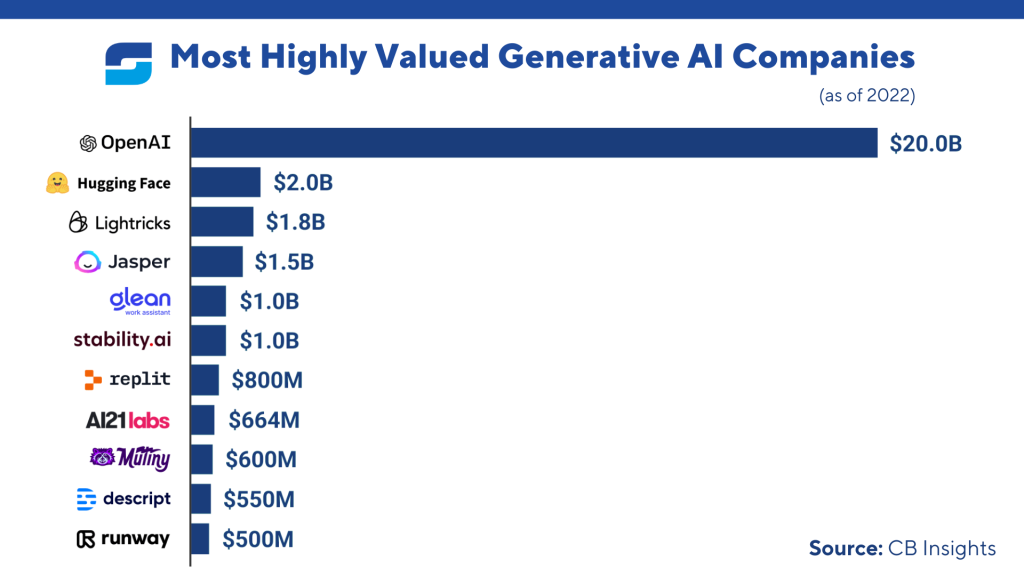

- Several prominent companies are active in the generative AI market worldwide, including Microsoft Corporation, Hewlett-Packard Enterprise Development, Oracle Corporation, NVIDIA, and Google

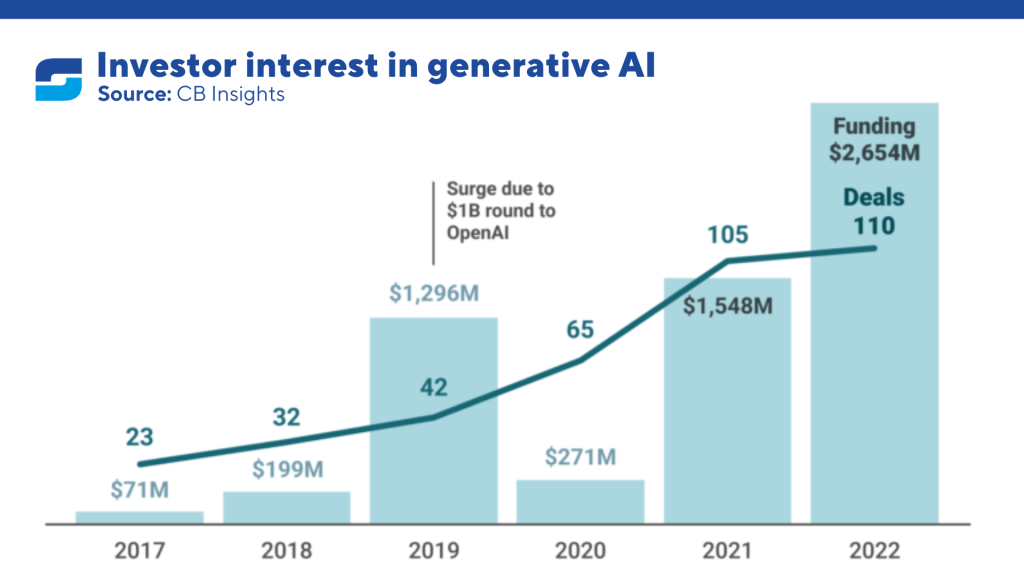

- Venture capital (VC) firms invested more than $1.7B in generative AI over three years, with AI drug discovery and software coding receiving the most funding

- Experts in the AI market predict that more cloud service providers will incorporate AI into their offerings, recognizing its long-term potential and wide applicability. Low-code and no-code tools, which cost 70% less and can be implemented in as little as three days, are also advancing IT modernization: 66% of developers are already using or plan to use them in 2023. These tools are making significant contributions to the advancement of generative AI technologies

Lowering the Barriers: Is It a Reality Yet?

One of the earliest debates sparked by ChatGPT centered on cyber security:

- Could cyber-criminals leverage ChatGPT or other generative AI tools to enhance their attack campaigns?

- Does this technology make it easier for aspiring threat actors to join the fray?

Without a doubt, ChatGPT is a formidable tool with a vast range of potential use cases. It enables existing users to streamline their workflows, aggregate knowledge, and automate routine tasks in an era defined by rapid digital transformation.

However, generative AI is far from a silver bullet that resolves every challenge at once.

It has real limitations.

First, it can only draw on the data it has been trained on, so it requires continuous retraining to stay current.

Moreover, concerns have already emerged regarding the reliability of the data used to train generative AI. Academic institutions and media outlets have flagged worries about AI-assisted plagiarism and the propagation of misinformation. Consequently, people often need to verify the output of generative AI since it’s sometimes challenging to discern whether the content was fabricated or based on unreliable information.

The same holds true for the application of generative AI in the realm of cyber-threats.

A criminal who wants to create malware would still need to guide ChatGPT through the process and then thoroughly test whether the resulting malware actually works.

Using this technology effectively for malicious purposes still requires substantial pre-existing knowledge of how attack campaigns work. It’s therefore safe to say that the bar for technical attacks hasn’t dropped significantly just yet. There are exceptions, however, such as the creation of credible phishing emails.

Quality Trumps Quantity in Generative AI-Powered Attacks

While the number of email-based attacks has remained largely constant since ChatGPT’s arrival, we observed a decline in phishing emails that rely on tricking recipients into clicking malicious links. At the same time, the average linguistic complexity of these phishing emails has increased.

Although correlation doesn’t imply causation, one theory is that ChatGPT allows cyber-criminals to shift their focus.

Rather than relying on email attacks with embedded malware or malicious links, criminals are now finding greater success in engineering sophisticated scams that exploit trust and prompt users to take direct action.

For example, they may ask the Human Resources department to change the CEO’s salary payment details so that the funds are redirected to an attacker-controlled money mule.

Return to the opening scenario: a criminal can effortlessly scrape information about a potential victim from their social media profiles and use ChatGPT to generate a highly credible, contextually appropriate spear-phishing email. Within moments, armed with this compelling, well-written message, the criminal can launch the attack.

Machines Engaged in Battle: The Future of Cyber Defense

The ongoing race for generative AI dominance will drive tech giants to release the most accurate, fastest, and most reliable AI solutions on the market.

At the same time, cyber-criminals will inevitably exploit these innovations for their own purposes. AI-generated content, including deepfake audio and video, makes it easier to launch personalized attacks that scale rapidly and succeed more often.

To safeguard employees, infrastructure, and intellectual property, defenders must embrace AI-powered cyber defense.

The self-learning AI systems available today excel at identifying and neutralizing subtle attacks because they develop a deep understanding of the users and devices in the organizations they protect. By learning the patterns of everyday business data, these systems build a detailed picture of what normal behavior looks like in that specific organization, so that deviations from it stand out.
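To make the idea of "learning what normal looks like" a little more concrete, here is a deliberately simplified sketch in Python. It does not describe how any particular commercial product works. It assumes hypothetical per-email features (`hour_sent`, `recipient_count`, `link_count`), learns a per-sender mean and standard deviation from past emails as the "baseline," and flags a new email if any feature deviates from that baseline by more than a chosen number of standard deviations (a z-score threshold). Real self-learning systems use far richer data and models.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

# Hypothetical features, chosen for illustration only.
FEATURES = ("hour_sent", "recipient_count", "link_count")


@dataclass
class Baseline:
    """Per-feature mean and standard deviation learned from one sender's history."""
    means: dict
    stdevs: dict


def learn_baseline(history: list[dict]) -> Baseline:
    """Learn what 'normal' looks like for one sender from past emails."""
    means = {f: mean(e[f] for e in history) for f in FEATURES}
    stdevs = {f: pstdev([e[f] for e in history]) for f in FEATURES}
    return Baseline(means, stdevs)


def anomaly_scores(email: dict, baseline: Baseline) -> dict:
    """Return the absolute z-score of each feature relative to the baseline."""
    scores = {}
    for f in FEATURES:
        sd = baseline.stdevs[f]
        diff = abs(email[f] - baseline.means[f])
        if sd == 0:
            # No variation in history: any change at all is treated as maximally unusual.
            scores[f] = 0.0 if diff == 0 else float("inf")
        else:
            scores[f] = diff / sd
    return scores


def is_anomalous(email: dict, baseline: Baseline, threshold: float = 3.0) -> bool:
    """Flag the email if any feature deviates by more than `threshold` standard deviations."""
    return any(s > threshold for s in anomaly_scores(email, baseline).values())


# Usage with made-up sample data for a single sender.
history = [
    {"hour_sent": 9, "recipient_count": 3, "link_count": 0},
    {"hour_sent": 10, "recipient_count": 2, "link_count": 1},
    {"hour_sent": 11, "recipient_count": 4, "link_count": 0},
    {"hour_sent": 9, "recipient_count": 3, "link_count": 1},
]
baseline = learn_baseline(history)
usual = {"hour_sent": 10, "recipient_count": 3, "link_count": 0}
odd = {"hour_sent": 3, "recipient_count": 40, "link_count": 5}
print(is_anomalous(usual, baseline))  # False
print(is_anomalous(odd, baseline))    # True
```

Keeping the baseline per sender mirrors the point above: what counts as unusual depends on the specific user, not on a global rule. Note that a high score only says an email is unusual for that sender, not that it is malicious; a real system would combine many such signals before acting.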

In essence, the key to thwarting hyper-personalized, AI-powered attacks is an AI that knows your business more deeply than any external generative AI ever could.

In this ever-evolving landscape, the battle lines are drawn, and it’s machines fighting machines.